Containerized Agent Runtime — LangChain + FastAPI.

HAN agents run in containerized environments with persistent memory, tool calling, API integration, and prompt chaining. Standardized REST/gRPC APIs ensure consistent execution across protocols.

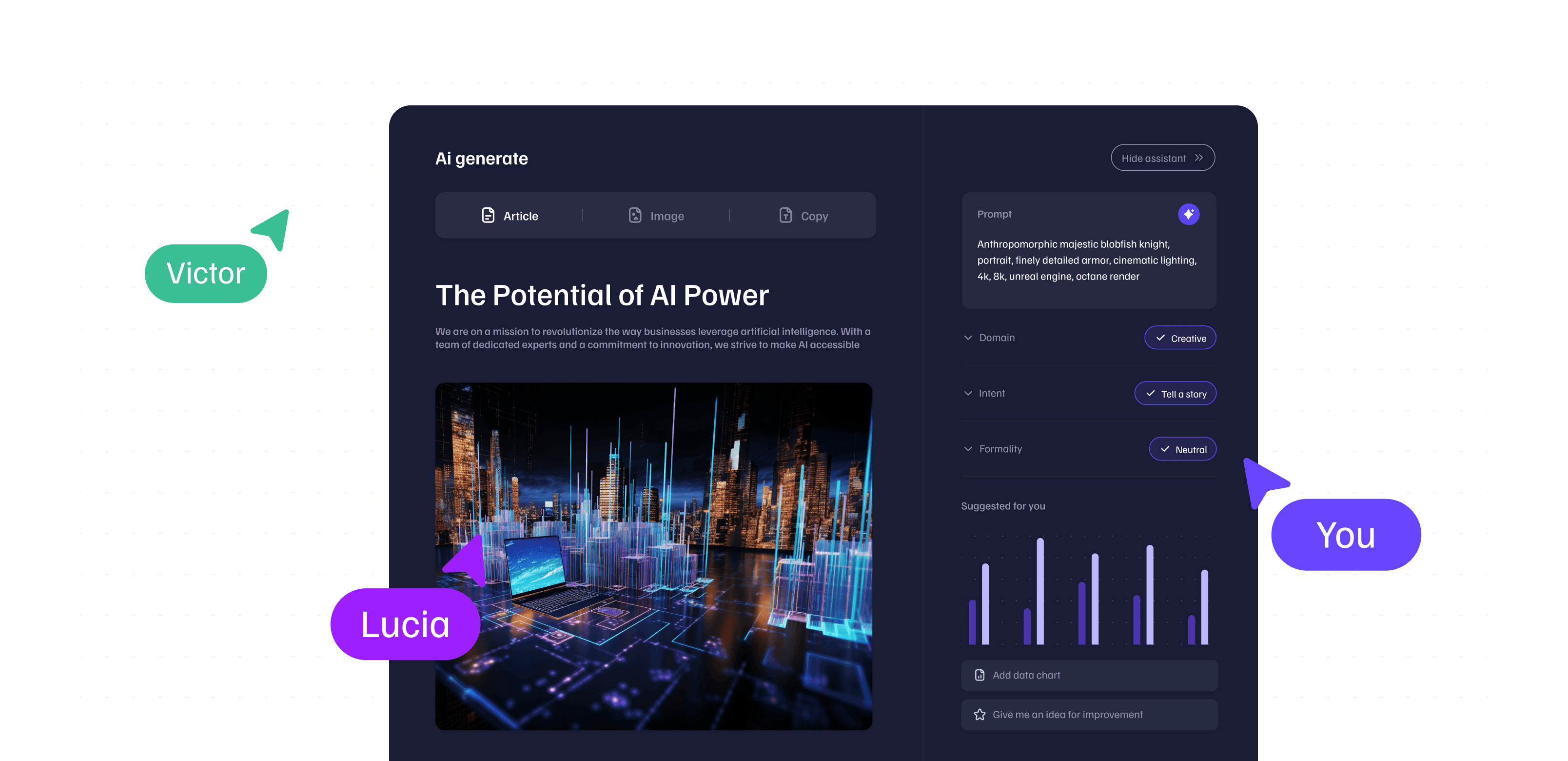

Agent Capabilities

HAN agents handle real-world tasks by combining standardized APIs, prompt chaining, autonomous planning, and memory. Specialized agents can be deployed for domain-specific workflows.

LangChain Integration

Built with LangChain for composable agent architectures and tool integration.

Persistent Memory

Redis and a vector database (Chroma or Weaviate) back memory graphs and context retention.
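To make the two memory tiers concrete, here is a minimal sketch that runs without any external service. It is an assumption-laden stand-in, not the HAN implementation: a bounded `deque` plays the role of the Redis-backed short-term context, a plain list with a toy bag-of-words similarity plays the role of the vector store, and the `AgentMemory` class and its method names are hypothetical.

```python
import math
from collections import Counter, deque


class AgentMemory:
    """Hypothetical in-process stand-in for a Redis + vector-store memory."""

    def __init__(self, max_turns: int = 3):
        self.recent = deque(maxlen=max_turns)  # short-term context (Redis-like role)
        self.archive = []                      # long-term entries (vector-store role)

    @staticmethod
    def _embed(text: str) -> Counter:
        # Toy bag-of-words "embedding"; a real setup would call an embedding model.
        return Counter(text.lower().split())

    @staticmethod
    def _cosine(a: Counter, b: Counter) -> float:
        dot = sum(a[w] * b[w] for w in a)
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    def remember(self, text: str) -> None:
        # Every entry goes to both tiers; the deque silently drops the oldest.
        self.recent.append(text)
        self.archive.append((text, self._embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        # Rank archived entries by similarity to the query and return the top k.
        q = self._embed(query)
        ranked = sorted(self.archive, key=lambda item: self._cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = AgentMemory(max_turns=2)
memory.remember("user prefers invoices in PDF format")
memory.remember("deployment target is the staging cluster")
memory.remember("weekly report is due on Friday")
```

Here `memory.recent` keeps only the last two entries, while `memory.recall("which format for invoices")` can still surface the oldest one from the archive. That split between short-term and long-term lookup is what the two stores provide in a real deployment.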

Multi-Step Tool Execution

LLM-powered agents with tool calling, API integration, and prompt chaining.
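A multi-step tool loop can be sketched as follows. This is illustrative only: `plan_next_step` is a hard-coded stub standing in for the LLM call, the `TOOLS` registry and its return strings are invented for the example, and none of the names come from the HAN runtime itself.

```python
from typing import Callable

# Hypothetical tool registry; the lambdas fake what real tools would return.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"top result for '{q}'",
    "api_call": lambda payload: f"200 OK ({payload})",
}


def plan_next_step(goal: str, history: list[dict]) -> dict:
    """Stub for the LLM: returns the next tool to run, or a final answer."""
    if not history:
        return {"tool": "search", "input": goal}
    if len(history) == 1:
        # Prompt chaining: the previous observation feeds the next step.
        return {"tool": "api_call", "input": history[-1]["observation"]}
    return {"final": f"done after {len(history)} steps"}


def run_agent(goal: str, max_steps: int = 5) -> tuple[str, list[dict]]:
    history: list[dict] = []
    for _ in range(max_steps):
        step = plan_next_step(goal, history)
        if "final" in step:
            return step["final"], history
        observation = TOOLS[step["tool"]](step["input"])
        history.append({**step, "observation": observation})
    # Guard against a planner that never produces a final answer.
    return "stopped: step limit reached", history


answer, trace = run_agent("latest order status")
```

The `max_steps` cap is a deliberate design choice in this sketch: an agent that plans its own steps needs an upper bound so that a confused planner cannot loop indefinitely.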

FastAPI Agent Runtime

Containerized agents built with FastAPI, featuring REST/gRPC APIs, WebSocket support, and standardized execution formats for seamless integration.
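One way to picture a standardized execution format is a pair of request and result envelopes that serialize identically regardless of transport. The sketch below is an assumption, not the documented HAN schema: `TaskRequest`, `TaskResult`, `to_wire`, and every field name are hypothetical.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class TaskRequest:
    task: str
    tools: list[str] = field(default_factory=list)
    task_id: str = field(default_factory=lambda: uuid.uuid4().hex)


@dataclass
class TaskResult:
    task_id: str
    agent_id: str
    status: str                   # "ok" or "error"
    result: Optional[str] = None
    error: Optional[str] = None


def to_wire(message) -> str:
    """Serialize an envelope to JSON so REST or WebSocket can carry it unchanged."""
    return json.dumps(asdict(message), sort_keys=True)


request = TaskRequest(task="summarize ticket 123", tools=["search"], task_id="t-1")
reply = TaskResult(task_id=request.task_id, agent_id="agent-a", status="ok", result="summary text")
```

Echoing `task_id` in the result lets a caller match replies to requests even when they arrive out of order, for example over a WebSocket connection.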

    from fastapi import FastAPI
    from han_agent import AgentRuntime

    app = FastAPI()
    agent = AgentRuntime(
        model="llm-model",
        memory="redis://localhost",
        tools=["search", "api_call", "database"],
    )

    @app.post("/execute")
    async def execute_task(task: dict):
        result = await agent.execute_task(task)
        return {"result": result, "agent_id": agent.id}

Runtime Performance

Containerized execution with optimized memory management and tool-calling performance.

Use Cases

LLM Task Automation

Autonomous systems leveraging LLMs, memory graphs, and multi-step tool execution.

API Integration

Agents with tool-calling capabilities for seamless API orchestration and data processing.

Memory-Persistent Tasks

Long-running agents with persistent memory for context-aware task execution.

Multi-Agent Orchestration

Containerized agents communicating via service mesh with context sharing.
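Context sharing between agents can be illustrated with two coroutines exchanging a message. This is a single-process sketch under stated assumptions: an `asyncio.Queue` stands in for a mesh-routed network call, and the `researcher` and `writer` agents and the message fields are hypothetical.

```python
import asyncio


async def researcher(outbox: asyncio.Queue) -> None:
    # Sends a finding together with the context that produced it.
    await outbox.put({
        "from": "researcher",
        "context": {"topic": "billing"},
        "payload": "3 open tickets",
    })


async def writer(inbox: asyncio.Queue) -> str:
    # Uses the shared context instead of rediscovering it.
    message = await inbox.get()
    topic = message["context"]["topic"]
    return f"[{topic}] summary of: {message['payload']}"


async def main() -> str:
    channel: asyncio.Queue = asyncio.Queue()  # stand-in for a service-mesh hop
    _, report = await asyncio.gather(researcher(channel), writer(channel))
    return report


report = asyncio.run(main())
```

In a containerized deployment each coroutine would be a separate service and the queue a network call, but the message shape (sender, shared context, payload) could stay the same.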